This post covers quick and dirty TCP load balancing with HAProxy, and some specific instructions for Graylog2.

(As an aside, if you’re looking for a gem that can log Rails applications to Graylog2, the current official gelf-rb gem only supports UDP. I’ve forked the repo and merged @zsprackett’s pull request in, which adds TCP support by adding protocol: GELF::Protocol::TCP as an option. I’ll remove this message when the official maintainer for gelf-rb merges @zsprackett’s pull request in.)

Technical context: Ubuntu 14.04, CentOS 7

1. Install HAProxy

On Ubuntu 14.04:

$ apt-add-repository ppa:vbernat/haproxy-1.5

$ apt-get update

$ apt-get install haproxy

On CentOS 7:

# HAProxy has been included as part of CentOS since 6.4, so you can simply do

$ yum install haproxy

2. Configure HAProxy

You’ll probably need root privileges to configure HAProxy:

$ vim /etc/haproxy/haproxy.cfg

There will be a whole bunch of default configuration settings. You can delete those that are not relevant to you, but there’s no need to at this moment if you just need to get started.

Simply append to the file the settings that we need:

listen graylog :12203

mode tcp

option tcplog

balance roundrobin

server graylog1 123.12.32.127:12202 check

server graylog2 121.151.12.67:12202 check

server graylog3 183.222.32.27:12202 check

This directive block named graylog tells HAProxy to:

- Listen on port 12203 - you can change this if you want

- Operate in TCP (layer 4) mode

- Enable TCP logging (more info here)

- Use round robin load balancing, in which connections are handed to each server in turn. You can also assign weights to servers with different hardware configurations. More on the different load balancing algorithms that HAProxy supports here

- Proxy requests to these three backend Graylog2 servers through port 12202, and check their health periodically
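To make the round robin behaviour concrete, here is a minimal Python sketch of how connections could be handed out in turn, with an optional weight per server. It is a simplified illustration, not HAProxy's actual scheduler (HAProxy interleaves weighted servers more evenly), and the `round_robin` helper and its `weights` argument are hypothetical names used only for this example.

```python
from itertools import cycle


def round_robin(servers, weights=None):
    """Return an endless iterator over servers, taking each in turn.

    A server with weight w appears w times per cycle. This is a naive
    expansion for illustration, not HAProxy's real algorithm.
    """
    weights = weights or {}
    expanded = [name for name in servers for _ in range(weights.get(name, 1))]
    return cycle(expanded)


# Server names taken from the configuration block above.
servers = ["graylog1", "graylog2", "graylog3"]

# Equal weights: each new connection goes to the next server in the list.
picker = round_robin(servers)
first_six = [next(picker) for _ in range(6)]
print(first_six)

# Hypothetical weighting: graylog1 takes twice as many connections.
weighted_picker = round_robin(servers, {"graylog1": 2})
first_four_weighted = [next(weighted_picker) for _ in range(4)]
print(first_four_weighted)
```

In HAProxy itself, weighting is expressed with the `weight` parameter on a `server` line rather than in code.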

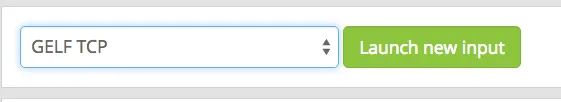

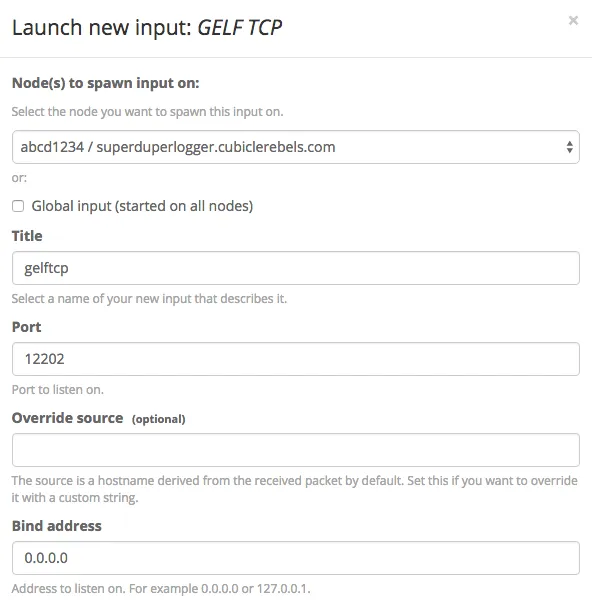

3. Create a TCP input on Graylog2

Creating a TCP input on Graylog2 through the web interface is trivial. We’ll use port 12202 here as an example:

4. Start HAProxy

$ service haproxy start

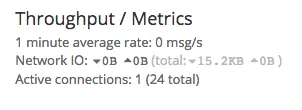

You can test whether HAProxy is proxying successfully by opening a TCP connection to HAProxy and checking the number of active connections on Graylog2’s input page.

# assuming 123.41.61.87 is the IP of the machine running HAProxy

# run this on your dev machine

$ nc 123.41.61.87 12203

You should see something like:
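To go one step further than an open connection, you can push an actual log message through the proxy. The sketch below assumes the input created earlier is a GELF TCP input, which expects one JSON document per message terminated by a null byte. The helper names `gelf_frame` and `send_gelf`, the `dev-machine` host label, and the message text are made up for this example.

```python
import json
import socket


def gelf_frame(short_message, host="dev-machine", level=6):
    """Build one GELF 1.1 message framed for TCP (JSON followed by a null byte)."""
    payload = {
        "version": "1.1",
        "host": host,  # name of the sending machine (assumed label)
        "short_message": short_message,
        "level": level,  # syslog severity; 6 means informational
    }
    return json.dumps(payload).encode("utf-8") + b"\x00"


def send_gelf(proxy_host, proxy_port, frame, timeout=5.0):
    """Open a TCP connection to HAProxy and send a single framed message."""
    with socket.create_connection((proxy_host, proxy_port), timeout=timeout) as conn:
        conn.sendall(frame)


# Building the frame needs no network access, so it is safe to inspect locally.
frame = gelf_frame("hello via HAProxy")
print(frame)
```

Calling `send_gelf("123.41.61.87", 12203, frame)` from your dev machine should then make the message show up on whichever Graylog2 node HAProxy picked, provided the input really is GELF TCP.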

5. Change HAProxy’s health check to Graylog2’s REST API

The last thing to do, and really, the only part of HAProxy that’s specific to Graylog2, is to change the way HAProxy checks the health of its backend Graylog2 servers.

Normally, HAProxy defaults to simply establishing a TCP connection.

However, HAProxy accepts a directive called option httpchk, with which HAProxy sends an HTTP request to a specified URL and checks the status of the response. 2xx and 3xx responses are good, anything else is bad.

Graylog2 exposes a REST API endpoint for the express purpose of allowing load balancers like HAProxy to check its health:

The status knows two different states, ALIVE and DEAD, which is also the text/plain response of the resource. Additionally, the same information is reflected in the HTTP status codes: If the state is ALIVE the return code will be 200 OK, for DEAD it will be 503 Service unavailable. This is done to make it easier to configure a wide range of load balancer types and vendors to be able to react to the status.

The REST API is open on port 12900 by default, so you can try the endpoint out:

# the IP address of one of our Graylog2 servers

$ curl http://123.12.32.127:12900/system/lbstatus

ALIVE

(The web interface also exposes the full suite of endpoints that the REST API provides, which you can access by System > Nodes > API Browser)
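As a rough illustration of what an HTTP health check does with this endpoint, the Python sketch below requests `/system/lbstatus` and applies the same rule described earlier: 2xx and 3xx count as healthy, everything else (including a failed connection) counts as down. The function names are hypothetical, and this is only an approximation of HAProxy's behaviour. For instance, `urllib` follows redirects itself, whereas HAProxy judges the first response.

```python
import urllib.error
import urllib.request


def status_is_healthy(status_code):
    """Apply the 2xx/3xx rule: those are good, anything else is bad."""
    return 200 <= status_code < 400


def check_lbstatus(host, port=12900, path="/system/lbstatus", timeout=2.0):
    """Return True if the Graylog2 node at host reports a healthy status."""
    url = f"http://{host}:{port}{path}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return status_is_healthy(response.status)
    except urllib.error.HTTPError as err:
        # urllib raises for 4xx/5xx, e.g. the 503 that a DEAD node returns.
        return status_is_healthy(err.code)
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure: treat the node as down.
        return False


# The rule itself can be checked without any network access.
print(status_is_healthy(200), status_is_healthy(503))
```

Running `check_lbstatus("123.12.32.127")` against one of the Graylog2 servers would return True for an ALIVE node and False for a DEAD or unreachable one.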

With that, we can indicate in the HAProxy configuration that we want to use Graylog2’s health endpoint:

listen graylog :12203

mode tcp

option tcplog

balance roundrobin

option httpchk GET /system/lbstatus

server graylog1 123.12.32.127:12202 check port 12900

server graylog2 121.151.12.67:12202 check port 12900

server graylog3 183.222.32.27:12202 check port 12900

Parting Notes

Right now, we have HAProxy installed on one instance that load balances requests between multiple instances running Graylog2. However, there’s still a single point of failure (if HAProxy goes down).

Ideally, the way to set up what is commonly called a high availability cluster is to run several HAProxy nodes and employ Virtual Router Redundancy Protocol (VRRP). Under VRRP, there is one active HAProxy node and one or more passive HAProxy nodes, all sharing a single floating IP. The active node periodically advertises itself to the passive nodes. If the active node goes down and the advertisements stop, the passive nodes elect the next active node amongst themselves to take over the floating IP. Keepalived is a popular solution for implementing VRRP.
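The failover idea can be sketched as a toy model in Python. This is not how Keepalived is implemented. It only captures the rule that the floating IP belongs to the highest-priority node that is still alive. The node names and priority values are invented for the example.

```python
def elect_active(nodes):
    """Return the name of the live node with the highest priority, or None."""
    alive = [node for node in nodes if node["alive"]]
    if not alive:
        return None
    return max(alive, key=lambda node: node["priority"])["name"]


# Hypothetical cluster of three HAProxy nodes sharing one floating IP.
nodes = [
    {"name": "haproxy-a", "priority": 150, "alive": True},
    {"name": "haproxy-b", "priority": 100, "alive": True},
    {"name": "haproxy-c", "priority": 50, "alive": True},
]

before = elect_active(nodes)  # holder of the floating IP while all nodes are up
nodes[0]["alive"] = False     # simulate the active node going down
after = elect_active(nodes)   # the next node takes over the floating IP
print(before, "->", after)
```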

Sadly, VPS providers such as DigitalOcean do not support multiple IPs per instance, making Keepalived and VRRP impossible to implement (there’s an open suggestion on DO where many users are asking for this feature). To mitigate this issue somewhat, we’ve used Monit to monitor HAProxy and automatically restart it if it goes down. It’s not foolproof, and we’ll be on the lookout for ways to improve this setup.